Google MUVERA: Multi-Vector Search Algorithm That Changed SEO

Google MUVERA is the first search overhaul that flips the script from “ranking tricks” to “retrievability science.”

Here’s the deal: instead of matching a single vector or a few keywords, Google’s multi-vector retrieval algorithm turns every idea on your page into dozens of tiny meaning coordinates.

Unlike single-vector embeddings, which boil a whole page down to one vector, multi-vector models represent each data point with a set of vectors. MUVERA then compresses each set into a fixed-dimensional encoding signature and fetches the best passages in milliseconds.

Because a multi-vector model compares passages using multi-vector similarity, not just token overlap, it surfaces meaning even when the wording differs. Those vector embeddings live in a shared embedding space, which enables the retrieval of relevant documents based on a richer semantic representation.

So why should you care? Because the new Google MUVERA algorithm decides whether your article shows up for complex queries: voice-assistant questions, long-tail searches, and product research. It also reframes how search results are assembled for conversational and tail queries across devices.

In the next few minutes, you’ll see exactly how MUVERA rolled out, what “multi-vector” really means, and the step-by-step SEO strategy you need to stay visible.

We’ll unpack fixed-length vectors, maximum inner product search, and all that jazz without melting your brain, then translate it into an actionable playbook covering content clusters, technical SEO, page experience metrics, and more.

By the end, you’ll know how to write for users and the dual-encoder model behind MUVERA, dodge the pitfalls that sank your competitors, and future-proof your site for every search engine evolution headed our way.

SEO professionals can anticipate user needs and avoid keyword-focused strategies that no longer work in a retrieval-first world.

Let’s dive in.

MUVERA roll-out timeline and what to watch

The MUVERA update didn’t land overnight; Google rolled it out in three deliberate waves, so engineers (and yes, you) could watch the ripple effects in real time.

Phase 1 (June – August 2025): MUVERA sat quietly in the background while Google A/B-tested a multi-vector approach for retrieval against the old single-vector setup. Only a sliver of queries, mostly long-tail and voice search, tapped the new system, but the gains in speed and relevance were visible inside Search Console if you looked closely. Internally, Google Research used this window to validate retrieval via fixed-dimensional signatures at scale.

Phase 2 (September – December 2025): The algorithm graduated to “limited deployment,” and Google let MUVERA handle a broader slice of traffic, particularly complex informational searches and shopping lookups where nuance matters. Early logs showed MUVERA achieved markedly better performance on information retrieval (IR) tasks without ballooning costs.

Phase 3 (2026): Google MUVERA will become the retrieval brain for virtually every query. Once that switch is fully flipped, being indexed will no longer guarantee visibility; only pages that score well on MUVERA’s multi-vector similarity will enter the ranking stage. At that point, MUVERA retrieves the right passage first, and ranking finishes assembling the search results. In other words, this year is your last on-ramp to align structure, depth, and technical SEO before the new normal locks in.

What is Google MUVERA algorithm?

The Google MUVERA algorithm (short for Multi-Vector Retrieval Algorithm) lets Google store and then search multiple vectors of meaning for every page, but with the speed of a single-vector index.

At the center is a multi-vector model that encodes each passage so those meanings can be compared quickly. This multi-vector retrieval architecture is now the reference design for multi-vector retrieval systems inside Google.

In practice, a multi-vector model maps natural language to multiple vectors, and Google’s MUVERA algorithm compresses those vectors into fixed-dimensional encodings, so search engines can resolve complex queries with far less guesswork.

The payoff? Faster, smarter answers for complex queries and voice search.

What is a vector?

A vector is simply a row of numbers that represents an idea, anything from “apple pie recipe” to “dual encoder model.”

- Single-vector search: Traditional search engines used single-vector embeddings: one big gist per page. That worked for basic matching, but it blurred nuance and leaned on keyword overlap that often missed intent.

- Multi-vector retrieval: MUVERA flips that script by letting multi-vector models give every subsection, analogy, or data point its own coordinate in semantic space, preserving nuance. The same approach carries over to multi-vector systems used for search and recommendations.

Think of it as tagging every paragraph with its own GPS pin; each is a fixed-length vector, and together these tags form multi-vector representations of your page, so Google can zoom straight to the exact passage a user needs.
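To make the GPS-pin idea concrete, here is a toy sketch in Python with NumPy. The three “meaning axes” (coats, boots, winter) and all vector values are made up for illustration; real embedding models use hundreds of dimensions, and in the MUVERA paper the multiple vectors typically come from token-level embeddings of a ColBERT-style model rather than one per paragraph. Averaging every passage into one page vector stands in for single-vector search; scoring each passage separately stands in for multi-vector search.

```python
import numpy as np


def normalize(v):
    """Scale a vector to unit length so dot products behave like cosine similarity."""
    v = np.asarray(v, dtype=float)
    return v / np.linalg.norm(v)


# Hypothetical 3-D meaning axes: [coats, boots, winter]. Values are illustrative only.
passages = {
    "wool coats": normalize([1.0, 0.0, 0.3]),
    "rain coats": normalize([1.0, 0.0, 0.0]),
    "winter boots": normalize([0.0, 1.0, 1.0]),
}
query = normalize([0.0, 1.0, 1.0])  # the query "winter boots"

# Single-vector page: average all passage vectors into one gist.
page_vector = normalize(np.mean(list(passages.values()), axis=0))
single_score = float(query @ page_vector)

# Multi-vector page: score each passage separately and keep the best one.
passage_scores = {name: float(query @ vec) for name, vec in passages.items()}
best_passage = max(passage_scores, key=passage_scores.get)
multi_score = passage_scores[best_passage]

print(f"single-vector page score: {single_score:.2f}")
print(f"best passage: {best_passage} ({multi_score:.2f})")
```

With these numbers the averaged page vector scores about 0.52 against the query, while the winter-boots passage on its own scores 1.00: the single gist dilutes exactly the niche intent a multi-vector index keeps.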

How does multi-vector retrieval differ from single-vector search?

| Classic single-vector search | MUVERA multi-vector retrieval |
|---|---|
| One vector per page | Dozens of vectors per page, then a fixed-dimensional encoding for speed |
| Relies on keyword overlap | Spots meaning even when wording differs |
| Misses niche intent (“winter boots” buried in a coats-and-boots article) | Surfaces the exact section about winter boots, thanks to separate vectors |
| Fast but shallow | Efficient multi-vector retrieval via fixed-dimensional encodings: speed and depth |

MUVERA’s architecture lets Google handle conversational queries, voice search, and long-tail questions without blowing up memory usage. That closes the efficiency gap that used to make multi-vector retrieval expensive at web scale.

If your content is well-structured and semantically rich, MUVERA will spotlight it. Avoid keyword stuffing in headings: repeated keywords add no new meaning, so the model treats them as noise.

How does the MUVERA algorithm work?

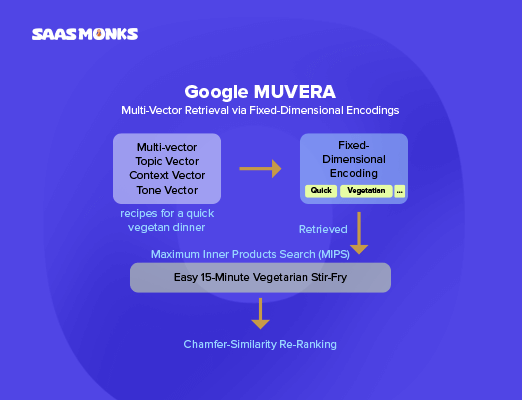

Under the hood, Google MUVERA follows a three-step relay: compress → sprint → sanity-check.

Let’s walk through each leg with examples so the math actually sticks.

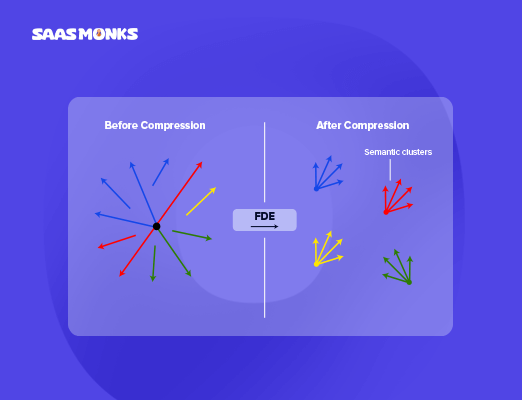

1. Fixed-dimensional encodings (FDEs)

Compress the meaning – keep the nuance.

Here’s what happens:

- Your article gets sliced into tiny meaning chunks – one vector for every paragraph, bullet, or image caption.

- MUVERA then “zips” those dozens of vectors into a single fixed-length vector (the FDE).

- That zip file still approximately preserves every sub-topic, but it’s now the same neat size as every other page in the index. In other words, your page is represented as a compact signature that keeps the relationships between ideas largely intact.

Quick example

Imagine you run a coffee blog. Your post “The ultimate guide to travel mugs” covers insulation, leak-proof lids, and eco-friendly materials. MUVERA assigns separate vectors to each section – one for heat retention, one for lid types, and one for sustainability. Then it squeezes them into one 768-number FDE. The details are compressed, not lost.

With fixed-dimensional encodings, Google can store billions of pages, each with rich, multi-vector meaning, without exploding memory usage. That’s the secret sauce behind efficient, scalable multi-vector retrieval.
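The compress step can be sketched in NumPy. This is a simplified illustration of the recipe described in the MUVERA paper: random hyperplanes (SimHash) split the embedding space into buckets, query vectors are summed per bucket, document vectors are averaged per bucket, and the buckets are concatenated. It uses a single repetition and skips the paper's empty-bucket filling and final projection; the function names and sizes are hypothetical. The point to notice is that a 5-vector page and a 40-vector page come out the same length.

```python
import numpy as np


def simhash_buckets(vectors, hyperplanes):
    """Bucket each vector by which side of each random hyperplane it falls on (SimHash)."""
    bits = (vectors @ hyperplanes.T > 0).astype(int)   # shape (num_vectors, k)
    powers = 1 << np.arange(hyperplanes.shape[0])       # [1, 2, 4, ...] turns bits into an id
    return bits @ powers                                # one bucket id per vector


def fixed_dimensional_encoding(vectors, hyperplanes, is_query):
    """Simplified FDE: one slot per bucket, concatenated into a single fixed-length vector."""
    num_buckets = 2 ** hyperplanes.shape[0]
    dim = vectors.shape[1]
    slots = np.zeros((num_buckets, dim))
    buckets = simhash_buckets(vectors, hyperplanes)
    for b in range(num_buckets):
        members = vectors[buckets == b]
        if len(members) == 0:
            continue  # the paper fills empty document buckets; left as zeros here
        # Queries sum the vectors in a bucket; documents average them.
        slots[b] = members.sum(axis=0) if is_query else members.mean(axis=0)
    return slots.reshape(-1)  # length num_buckets * dim, regardless of input count


# Hypothetical sizes: 8-dimensional passage vectors, 3 hyperplanes -> 8 buckets.
rng = np.random.default_rng(0)
hyperplanes = rng.standard_normal((3, 8))
short_page = rng.standard_normal((5, 8))    # 5 passage vectors
long_page = rng.standard_normal((40, 8))    # 40 passage vectors
query_vecs = rng.standard_normal((4, 8))

print(fixed_dimensional_encoding(short_page, hyperplanes, is_query=False).shape)  # (64,)
print(fixed_dimensional_encoding(long_page, hyperplanes, is_query=False).shape)   # (64,)
query_fde = fixed_dimensional_encoding(query_vecs, hyperplanes, is_query=True)
print(float(query_fde @ fixed_dimensional_encoding(short_page, hyperplanes, is_query=False)))
```

Because both page sizes collapse to the same 64 numbers, every document can sit in one ordinary single-vector index. The paper adds repetitions and projections to tune the trade-off between FDE length and how closely the FDE inner product approximates Chamfer similarity.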

2. Maximum inner product search (MIPS)

Sprint through the index – find the closest matches first.

Here’s what happens:

- A user fires off a query: “eco-friendly leak-proof travel mug for car cup holder.”

- MUVERA turns that sentence into its own FDE.

- Google runs a maximum inner product search: a lightning-fast dot-product race that asks, “Which document vectors sit closest to this query vector?” The shortlist reflects multi-vector similarity between the intent and your content – proximity here signals true topical fit.

Quick example

Your travel-mug guide’s FDE lands just 0.03 away from the query’s FDE in cosine distance: super close. Another page, a generic “water bottles 2024” listicle, sits 0.22 away (colder). MIPS keeps your guide and tosses the listicle.

This step is crazy fast. Google can compare millions of FDEs in milliseconds, even for voice search or multi-clause questions. That speed comes from highly optimized MIPS algorithms, which apply because each FDE is just an ordinary single vector.
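Here is a brute-force sketch of that dot-product race, assuming the FDEs are already computed; the toy 2-D vectors and page labels are hypothetical. Production systems don't scan every vector; they use approximate nearest-neighbor indexes, which is possible precisely because an FDE is an ordinary single vector.

```python
import numpy as np


def mips_top_k(query_fde, doc_fdes, k):
    """Exact (brute-force) maximum inner product search over a matrix of document FDEs."""
    scores = doc_fdes @ query_fde            # one inner product per document
    top = np.argsort(-scores)[:k]            # indices of the k largest scores
    return top, scores[top]


# Toy 2-D FDEs for three hypothetical pages.
doc_fdes = np.array([
    [0.9, 0.4],   # travel-mug guide
    [0.1, 0.2],   # generic water-bottle listicle
    [0.7, 0.5],   # mug review
])
query_fde = np.array([1.0, 0.5])

top, scores = mips_top_k(query_fde, doc_fdes, k=2)
print(top.tolist(), scores.round(2).tolist())
```

The listicle (index 1) scores lowest and drops out of the shortlist; only the two mug pages move on to the re-rank.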

3. Chamfer similarity re-rank

Double-check the shortlist – reward complete answers.

Here’s what happens:

- Google takes the top ~200 FDE matches from MIPS.

- It pulls up their original, uncompressed multi-vector sets (the FDE is only a compressed stand-in).

- Using Chamfer similarity, it performs an exact multi-vector similarity check between the query and document sets, down to the paragraph. This re-rank step can also swap in other, more sophisticated similarity functions for certain domains.

- Results that cover all facets of the query bubble to the top; near misses sink.
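A minimal sketch of the re-rank step follows. The scoring function is the standard Chamfer (MaxSim) similarity used by late-interaction models such as ColBERT, which is the score MUVERA's FDEs are designed to approximate: each query vector finds its best-matching document vector, and those best scores are summed. The axes, vectors, and page names are hypothetical and mirror the travel-mug example.

```python
import numpy as np


def chamfer_similarity(query_vecs, doc_vecs):
    """Sum, over query vectors, of each one's best inner product with any document vector."""
    sims = query_vecs @ doc_vecs.T          # shape (num_query_vecs, num_doc_vecs)
    return float(sims.max(axis=1).sum())


def chamfer_rerank(query_vecs, candidates):
    """Re-score shortlisted documents with exact Chamfer similarity, best first."""
    scored = [(doc_id, chamfer_similarity(query_vecs, vecs)) for doc_id, vecs in candidates.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Hypothetical axes: [leak-proof, eco materials, insulation]
query_vecs = np.array([[1.0, 0.0, 0.0],    # "leak-proof"
                       [0.0, 1.0, 0.0]])   # "eco-friendly"
candidates = {
    "your-guide": np.array([[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]),
    "rival-page": np.array([[1.0, 0.0, 0.0], [0.0, 0.0, 1.0]]),
}
print(chamfer_rerank(query_vecs, candidates))
# [('your-guide', 2.0), ('rival-page', 1.0)]
```

Because every query vector must find its own match, a page that covers only one facet of a two-facet query caps out at half the score of a page that covers both.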

Quick example

Your guide’s “leak-proof lids” section and “sustainability” section align perfectly with two separate query vectors. A rival page that talks about insulation but never mentions eco materials leaves one query vector hanging. Chamfer scores your guide higher, so you outrank them.

Chamfer is the accuracy gate. It ensures pages that only half-answer a complex query don’t sneak onto page one. In short, thin content dies here, not in a later ranking tweak.

SEO strategy shifts to succeed in the MUVERA era

The Google MUVERA algorithm forces a seismic pivot: SEO is no longer a race to cram keywords and backlinks; it’s a game of making your ideas retrievable by MUVERA’s multi-vector retrieval layer. In practice, that means the following shifts.

Why does being indexed no longer guarantee retrieval?

Google MUVERA has inserted a new “semantic gate” between indexing and ranking, and that changes everything. Under the old single-vector model, once Googlebot crawled your page and stored it in the index, you automatically joined the ranking race; keyword overlap and link equity decided where you landed. MUVERA’s multi-vector retrieval algorithm rewrites that flow.

Now, every query is turned into its own vector and pitted against billions of fixed-dimensional encodings in a split-second maximum inner product search. Only when the query relates cleanly to a passage do you clear the gate.

In plain English, being indexed just means Google knows you exist; being retrieved means MUVERA believes your content genuinely answers the user’s intent. Until your ideas resonate at this multi-vector level, your page stays invisible, no matter how flawlessly it’s optimized on the surface.

That single shift from index-first thinking to retrieval-first reality marks the biggest strategic upheaval of the MUVERA update.

How do ranking factors apply after retrieval?

Before Google MUVERA, traditional signals (backlinks, topical authority, and Core Web Vitals) were baked into the entire search pipeline. Google’s single-vector index would pull a broad set of pages, and those ranking factors decided the winners in one long computation.

MUVERA splits that process into two. First comes the multi-vector retrieval gate: a lightning-fast maximum inner product search that selects only the documents whose encodings align most closely with the query vector. Only after a page survives this semantic culling does Google apply the familiar ranking stack – link equity, E-E-A-T checks, page experience, and other quality layers.

In other words, the signals we’ve obsessed over for years still matter, but they now shape the search results only after MUVERA verifies that your content’s vectors match the user intent. If those vectors miss the mark, links and Core Web Vitals never get to speak. This reorder is the core shift of the MUVERA update: ranking factors no longer pull your page into view – they merely sort the shortlist MUVERA has already chosen.
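The two-stage order can be sketched as follows. This models the article's description, not Google's actual pipeline: ranking_score is a hypothetical single number standing in for the whole ranking stack (links, E-E-A-T, page experience), and the toy FDEs and page names are made up.

```python
def inner_product(a, b):
    return sum(x * y for x, y in zip(a, b))


def two_stage_search(query_fde, doc_fdes, ranking_score, shortlist_size):
    """Stage 1 keeps the closest documents by inner product; stage 2 orders only those."""
    retrieval = {doc: inner_product(query_fde, fde) for doc, fde in doc_fdes.items()}
    shortlist = sorted(retrieval, key=retrieval.get, reverse=True)[:shortlist_size]
    return sorted(shortlist, key=lambda doc: ranking_score[doc], reverse=True)


doc_fdes = {"mug-guide": [0.9, 0.4], "bottle-listicle": [0.1, 0.2], "mug-review": [0.8, 0.3]}
# Made-up link/quality scores: the listicle has the "strongest" ranking signals.
ranking_score = {"mug-guide": 0.6, "bottle-listicle": 0.99, "mug-review": 0.8}
print(two_stage_search([1.0, 0.5], doc_fdes, ranking_score, shortlist_size=2))
# ['mug-review', 'mug-guide']
```

The listicle's 0.99 ranking score never gets used: it fails the retrieval shortlist, so the ranking stage only reorders the two mug pages.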

Why do semantic signals now beat surface-level optimization?

The Google MUVERA algorithm listens for meaning, not makeup. In the pre-MUVERA era, traditional keyword matching, tweaking title tags, and bolding keywords could nudge a page upward, even if the content barely scratched the topic. MUVERA’s multi-vector retrieval changes the score sheet.

Here’s why. Each passage on your page is distilled into vectors that capture context, synonyms, and intent. During retrieval, MUVERA compares those vectors to the query’s vectors using multi-vector similarity.

A paragraph that genuinely explains “spill-resistant lids” will light up the algorithm even if the exact phrase isn’t repeated, because it produces a stronger multi-vector embedding for that passage. Conversely, a section stuffed with “leak-proof mug” ten times but offering no new information generates almost identical vectors and gains no extra traction.
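To see why repetition gains nothing, here is the Chamfer score from the re-rank step applied to a focused page and a keyword-stuffed one. Treating Chamfer as the relevant score at this point is an assumption for illustration, and the axes and vectors are hypothetical. Each query vector only counts its single best match, so ten copies of the same passage vector score exactly the same as one.

```python
import numpy as np


def chamfer_similarity(query_vecs, doc_vecs):
    """Sum of each query vector's best inner product against the document's vectors."""
    return float((query_vecs @ doc_vecs.T).max(axis=1).sum())


# Hypothetical axes: [leak-proof lids, eco materials]
query = np.array([[1.0, 0.0], [0.0, 1.0]])      # two facets of the query
focused = np.array([[1.0, 0.0], [0.0, 1.0]])    # one real passage per facet
stuffed = np.array([[1.0, 0.0]] * 10)           # the same idea repeated ten times

print(chamfer_similarity(query, focused))       # 2.0
print(chamfer_similarity(query, stuffed))       # 1.0
print(chamfer_similarity(query, stuffed[:1]))   # 1.0: nine extra copies add nothing
```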

What does MUVERA mean for search quality and user experience?

Instead of one “best guess” vector per page, MUVERA stores multiple vectors that capture every sub-topic, then squeezes them into a lightning-fast fixed-dimensional encoding. When you type – or voice – “eco-friendly leak-proof travel mug,” Google fires a maximum inner product search that scans billions of those encodings in milliseconds and pulls back only the closest matches.

The effect is two-fold:

- Relevance skyrockets. It spots pages that answer the whole question, even if the exact wording never appears. At the same time, efficient multi-vector retrieval keeps latency low at web scale.

- Speed stays sub-100 ms. MUVERA’s compressed index uses a fraction of the memory older multi-vector systems needed, so users still get instant results.

Real-world picture: With the old single-vector model, a giant “travel mugs” article might miss the query above because its one vector leaned toward hot-drink insulation. MUVERA can lock onto the leak-proof paragraph and serve it first.

Why optimize for the generative search experience?

Search used to be a race for blue-link positions. That’s classic SEO. With MUVERA feeding Google’s AI overviews, a new discipline, GEO (Generative-Experience Optimization), steps to the front. GEO isn’t about where your page ranks; it’s about whether MUVERA selects a passage, image, or chart from your site to power the instant, AI-written answer that sits above every link.

Here’s the shift: Google MUVERA chooses those passages from an embedding space shaped by natural language processing, before ranking sorts results. If your content doesn’t clear that retrieval gate, it can’t appear in the summary. In effect, MUVERA decides who teaches, and the ranking stack decides who follows up.

For users, that AI overview often satisfies the query on the spot. Your brand shows up in the citation line, the image carousel, or the “from the web” call-out: visibility that happens even when the click never comes. For creators, that means authority and trust begin in the overview box, not on page one of the SERP.

Why does thin content fail under MUVERA?

In the MUVERA era, a skimpy 300-word post stuffed with keywords is more than just weak – it’s practically invisible. Google MUVERA judges content depth by the diversity and richness of the vectors it extracts.

Thin articles generate only a handful of near-duplicate vectors, leaving their fixed-dimensional encoding almost indistinguishable from a dozen other shallow pages. Trying to shortcut depth gains little.

During MUVERA’s multi-vector retrieval pass, that limited signal rarely clears the similarity threshold, and the subsequent Chamfer re-rank – designed to check every facet of user intent – exposes the gaps even more.

The MUVERA algorithm accelerates what was already happening – surface-level content fades, while thorough, multi-vector explanations survive the semantic cull.

Why do you need to think like MUVERA’s model, not a crawler?

A crawler cares about things like crawl depth, URL paths, and keyword placement. A model – specifically the Google MUVERA algorithm – cares about the clarity, depth, and relationships encoded in the vectors it builds from every passage.

When MUVERA reads your article, it isn’t stepping through tags line by line. It’s converting clusters of words, images, and data points into fixed-length vectors, then measuring how those vectors relate to a user’s query vector.

If the concepts are muddled, or if sections overlap without clear intent, MUVERA’s representation of your content turns fuzzy – and fuzzy vectors don’t make the retrieval shortlist.

So the strategic shift is simple: stop optimising for how a crawler walks through your markup and start writing, structuring, and illustrating content the way a multi-vector model thinks. Well-scoped sections help it represent each idea cleanly. When you think like the model, MUVERA can see exactly what you mean, and visibility follows.

How does MUVERA reshape SEO?

| Before MUVERA | After MUVERA |
|---|---|
| Get crawled → maybe rank | Get crawled → get retrieved → then rank |
| Page-level keyword focus | Passage-level semantic clarity |
| Ranking factors decide visibility | Ranking factors matter only after you survive MUVERA’s retrieval filter |

The MUVERA algorithm turns Google SEO into a two-step game: prove you’re retrievable, then prove you deserve to rank.

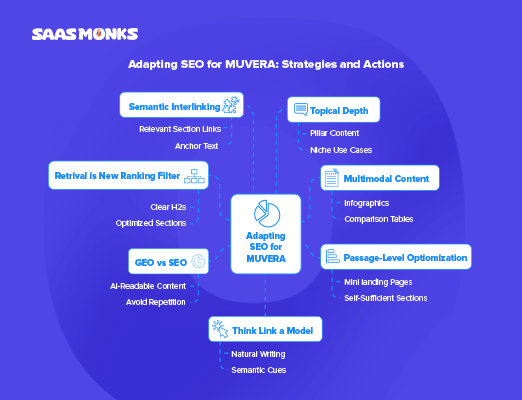

How do you adapt your SEO for MUVERA? Mindset shifts and an action plan

The Google MUVERA algorithm puts a hard ceiling on how far old-school tactics can take you, so your first task is a mental reset. Instead of asking, “How do I rank for this keyword?” you need to ask, “How do I make my ideas impossible for MUVERA to ignore?”

That shift reframes every part of your workflow, from topic research and on-page structure to technical SEO and performance tracking.

Next, we’re going to break this down step-by-step: how E-E-A-T morphs in 2025, why multimodal assets matter, why semantic interlinking replaces keyword stuffing, and how to build a phased implementation roadmap.

How is E-E-A-T evaluated in the MUVERA era?

With the new Google MUVERA algorithm, Google judges your experience, expertise, authority, and trust (E-E-A-T) inside every paragraph, not just across the whole page.

That’s because MUVERA breaks your content into lots of tiny meaning snapshots called vectors. Each vector carries clues about how trustworthy that single section is. Clear citations let MUVERA align document vectors to relevant documents in the knowledge graph. If those clues are missing, Google’s multi-vector retrieval system may never even show your page.

| E-E-A-T before MUVERA | E-E-A-T after MUVERA |
|---|---|
| One authority score for the whole page. | Trust checked section by section. |
| Citations or bios helped the entire article. | Proof lifts only the passage it sits in. |
| E-E-A-T applied after keyword match. | E-E-A-T helps decide if you’re shown at all. |

Quick example

Old days: A long article about travel mugs ranks because it has many backlinks, even though its “safety” section is thin.

MUVERA days: A 300-word lab report beats that article for “BPA-free travel mug” because its safety paragraph has real test data and expert quotes – strong signals packed into that vector.

Simple, practical tips

- Put proof where you make a claim. Drop lab photos, screenshots, or short case studies right under the related heading. This evidence sticks to that vector and boosts trust.

- Link to original sources. Government guidelines, peer-reviewed research, or manufacturer test reports carry more weight than blog summaries.

- Show real credentials. Add a byline like “Written by Jane Lee, Food-Grade Plastics Engineer, 10 years” so search engines know an expert is talking.

- Skip copy-and-paste fluff. Fresh insights create unique vectors; recycled wording blends into the crowd.

In the MUVERA era, every section needs its own mini “trust badge.” Give each heading solid proof and expert voice, and the new search algorithm is far more likely to retrieve and later rank your page.

How does Google treat AI-generated content under MUVERA?

Short answer: Google doesn’t punish content just because a machine helped write it, but MUVERA makes low-value, copy-paste AI text easier to spot and skip. What matters is how well each section scores in MUVERA’s new multi-vector retrieval test.

| AI content in the old model | AI content in the MUVERA era |
|---|---|
| AI text could slip through if it “looked” original. | Multi-vector retrieval measures meaning, so repeated AI boilerplate produces near-duplicate vectors and is filtered out early. |
| One authority score for the whole page. | Each AI-written paragraph must carry its own trust cues to clear MUVERA’s retrieval gate. |
| Keyword overlap sometimes masked fluff. | The Google MUVERA algorithm matches user intent at a deeper level; empty wording fails the similarity check. |

What Google still checks

- Helpfulness. Does the text solve a real problem or answer a clear question?

- Section-level E-E-A-T. Expertise, sources, and transparent authorship must live inside every heading, not just at the top of the page.

- No spam games. Mass-produced, keyword-stuffed AI pages generate duplicate vectors and vanish at MUVERA’s retrieval gate.

Why do topic clusters matter more in the MUVERA era?

Under the Google MUVERA algorithm, a single “ultimate guide” isn’t enough.

MUVERA’s multi-vector retrieval looks for deep, well-connected coverage of a subject, exactly what a topic cluster supplies. When every article in a cluster tackles one slice of user intent and all pieces link together, MUVERA sees a dense web of vectors that scream, “We own this topic.” This helps multi-vector systems disambiguate related intents across the site.

How do clusters help MUVERA retrieve your content?

| Topic clusters before MUVERA | Topic clusters after MUVERA |
|---|---|
| One long post + scattered tags. | Pillar page + interlinked sub-topics. |
| Keywords glue content together. | Semantic signals (shared vectors, anchor text) glue it together. |
| Ranking factors sort pages first. | MUVERA retrieves the whole cluster before ranking kicks in. |

Because each sub-topic spawns its own rich vector, the cluster as a whole boosts your odds of clearing MUVERA’s retrieval gate for dozens of complex queries – voice search, tail queries, you name it.

How do you build a topic cluster in three steps?

- Pick a pillar page. For example, “The complete guide to travel mugs” covers materials, insulation science, sizing, and cleaning while targeting the head term “travel mugs.”

- Map 5–10 support posts. Each answers one narrow question, giving MUVERA a separate vector for every slice of intent. For example:

  - “Best leak-proof travel mugs for commuters”

  - “Stainless-steel vs. ceramic travel mugs: heat test results”

  - “How fixed-dimensional encodings might rank eco-friendly mugs”

  - “Cleaning hacks to keep coffee taste-free of yesterday’s tea”

  - “Travel mug sizes that fit standard car cup holders”

- Link like a footprint.

  - From pillar to support pages: broad context to detail.

  - From support pages to pillar page: “See our full travel-mug buying guide.”

  - Between support pieces: natural cross-links (“MIPS vs Chamfer comparison”).

  Consistent, descriptive anchors act as vector breadcrumbs.
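A quick way to audit this linking pattern is to check that the pillar links to every support post and every support post links back. A minimal sketch over a hypothetical link map (page → set of pages it links to); the URLs are made up, and in practice you would build the map from a site crawl.

```python
def missing_cluster_links(pillar, supports, links):
    """Return (from_page, to_page) pairs a pillar-and-spoke cluster expects but the site lacks."""
    missing = []
    for page in supports:
        if page not in links.get(pillar, set()):
            missing.append((pillar, page))     # pillar should link down to each support post
        if pillar not in links.get(page, set()):
            missing.append((page, pillar))     # each support post should link back up
    return missing


links = {
    "/travel-mugs": {"/leak-proof-mugs", "/mug-sizes"},
    "/leak-proof-mugs": {"/travel-mugs", "/mug-sizes"},
    "/mug-sizes": set(),
}
print(missing_cluster_links("/travel-mugs", ["/leak-proof-mugs", "/mug-sizes"], links))
# [('/mug-sizes', '/travel-mugs')]
```

Cross-links between support pieces are left out of the check on purpose, since which of those make sense depends on the topics.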

Quick wins to supercharge retrieval

- Shared schema: Use identical FAQ or How-To markup across the cluster (e.g., “How do I know if a mug is leak-proof?”) so MUVERA spots the family resemblance.

- Unified design cues: Using the same hero graphic or icon set across the cluster creates visual “signatures” that reinforce topical unity in Google’s image vectors.

- Cluster audits: If a query surfaces the wrong support post (say, the cleaning guide for “best leak-proof mug”), adjust H2s or split content to tighten vector focus.

Why does multi-format content improve retrieval?

The Google MUVERA algorithm isn’t just reading text. Its multi-vector retrieval system also creates vectors for images, video transcripts, audio snippets, and even product specs. If you rely on walls of copy alone, you’re leaving whole layers of retrievability on the table.

How do multiple formats create more retrievable signals?

| Single-format page | Multi-format page |
|---|---|
| Only text vectors to match a query. | Extra vectors from photos, charts, clips, and PDFs. |
| Limited ways to prove expertise. | Visual demos, data tables, and voice explanations add E-E-A-T clues. |
| Harder to win SGE or voice results. | Rich media gives Google assets for AI overviews, “listen” cards, and image packs. |

Because each format spawns its own vector, you multiply the chances that at least one of them lines up with the searcher’s intent and clears MUVERA’s retrieval gate the first time.

Real-world example:

- A 30-second leak-proof demo video spawns motion-and-audio vectors that match “see it in action.”

- An insulation chart image turns into visual vectors that answer “heat retention test graph.”

- A downloadable cleaning checklist PDF gives the search engine a document vector perfect for “travel-mug care guide.”

All those extra signals live alongside your text, making the whole cluster harder to ignore.

Quick, low-lift wins

- Turn key steps into short videos. Film a one-minute demo on your phone, upload it to YouTube, and embed it. MUVERA now has video vectors plus a transcript.

- Add simple data visuals. Convert comparison tables or lab results into PNG charts. Alt-text and captions create image vectors with clear context.

- Record a mini audio takeaway. A 60-second “expert tip” clip gives Google voice content for smart-speaker queries.

- Offer helpful downloads. PDFs, checklists, or templates produce document vectors and satisfy “printable” searches.

- Keep everything lightweight and fast. Optimise file size; Core Web Vitals still kick in after retrieval.

Multi-format content is extra ammunition for MUVERA’s multi-vector embedding. The more ways you present your expertise, the more vectors you own, and the better your odds of showing up for text, image, video, and voice searches alike.

MUVERA, NLP, and LLMs: why should SEOs think AI-first?

MUVERA “reads” pages the same way a large language model (LLM) understands a chat prompt. It cares far more about the idea you explain than the exact words you use.

What does “AI-first” look like for everyday SEO?

- Intent over incidence: Stop asking, “Did I use the keyword four times?” Start asking, “Does this paragraph answer the user’s real question in one go?”

- Section-level authority: Give every H2 its own proof – original data, expert quote, or clear example. That evidence lives inside the vector and boosts retrieval.

- Multi-format by default: AI systems love visuals and audio. A quick leak-proof demo video or a simple heat-retention chart spawns extra vectors and feeds image, video, and voice results.

- Conversational clarity: Write like you’re talking to the reader. Short sentences, plain verbs, direct answers. LLMs often lift lines like those into SGE summaries nearly verbatim.

- Semantic links: Internal anchors such as “compare stainless vs ceramic” tell MUVERA, and any LLM on top of it, how ideas connect.

How do you analyze user intent at a multi-vector level?

MUVERA doesn’t fetch pages that simply mention a phrase; it finds passages whose vectors are most similar to the user’s intent. So if you want Google MUVERA to pick you, you have to nail user intent at a deeper, more granular level than ever before.

1. Dig into real queries the MUVERA way

- Google Search Console → Performance → Search results → Queries. Filter by your page, then look past your target keywords for “less obvious” modifiers like diagram, PDF, and spill test.

- People Also Ask & “Related searches.” Copy the question clusters into a sheet. Highlight verbs: compare, clean, fit, prove.

- Reddit & niche forums. Sort threads by “new.” Spot fresh pain points MUVERA may not see in older SERP data.

- Voice search transcriptions. Ask your phone assistant the query. Note the conversational wording; it often signals intent that text SERPs miss.

Each line you collect is raw intent data ready to be matched with a dedicated content section and its own vector.

2. Break intents into “micro-jobs”

Think of every intent as a single job the reader hires your content to do.

- Job 1: “Help me choose a leak-proof travel mug under $50.”

- Job 2: “Show me a chart comparing heat retention over 6 hours.”

When you outline a post, map each micro-job to its own H2/H3. That way MUVERA creates a unique vector for each job and can retrieve it independently.

3. Match format to intent

| Intent marker | Best format | Why MUVERA loves it |
|---|---|---|
| compare, vs, chart | Data table + PNG chart | Generates image + text vectors for visual queries. |
| demo, see, leak | 30-sec video clip | Spawns audio & motion vectors; useful for voice searches. |
| guide, how, steps | Numbered list + checklist PDF | Lists create clear passage vectors; PDF adds a doc vector. |
| definition, meaning | Short explainer box | Helps MUVERA answer featured snippet queries. |
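If you triage many queries, the format table can be turned into a simple lookup. This is a hypothetical keyword-rule sketch, not how MUVERA classifies intent: it splits a query into words, checks them against each row's intent markers in table order, and falls back to a made-up “Standard text section” default.

```python
import re

# Intent markers and formats from the format table; the first matching row wins.
FORMAT_BY_MARKER = [
    ({"compare", "vs", "chart"}, "Data table + PNG chart"),
    ({"demo", "see", "leak"}, "30-sec video clip"),
    ({"guide", "how", "steps"}, "Numbered list + checklist PDF"),
    ({"definition", "meaning"}, "Short explainer box"),
]


def suggest_format(query):
    """Pick a content format by looking for the table's intent markers in the query."""
    words = set(re.findall(r"[a-z]+", query.lower()))   # "leak-proof" -> {"leak", "proof"}
    for markers, fmt in FORMAT_BY_MARKER:
        if words & markers:
            return fmt
    return "Standard text section"  # hypothetical fallback, not from the table


print(suggest_format("leak-proof mug for car"))   # 30-sec video clip
```

Row order matters: a query like “how to compare mugs” hits the first row, so put the intents you care about most at the top.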

4. Build a “vector-first” content map

- List every micro-job + preferred format.

- Create a section outline that assigns one intent per heading.

- Add evidence (photo, quote, data) directly under the heading: that’s E-E-A-T at the vector level.

- Link between related intents (e.g., heat retention chart → best materials). Semantic breadcrumbs guide MUVERA’s retrieval paths.

5. Validate with live data

After publishing:

- Check Search Console for new long-tail queries you never targeted. If they’re firing impressions, your vectors are resonating.

- Look for rising “zero-click” impressions. They often mean MUVERA is feeding your passages into SGE or voice answers.

- Adjust headings if the wrong section is gaining impressions – tighten wording to align with the intent you want.
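The first check can be scripted. A minimal sketch, assuming you've exported query and impression pairs from the Search Console Performance report for a date range before and after publishing; the min_impressions cutoff of 10 is an arbitrary noise filter, and the sample queries are made up.

```python
def new_queries(before, after, min_impressions=10):
    """Queries with enough impressions after publishing that were absent before."""
    return sorted(
        query for query, impressions in after.items()
        if query not in before and impressions >= min_impressions
    )


before = {"travel mug": 500}
after = {"travel mug": 520, "leak-proof mug for car": 35, "mug lid gasket": 4}
print(new_queries(before, after))   # ['leak-proof mug for car']
```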

Technical & measurement playbook for MUVERA

MUVERA rewards sites that are easy for its multi-vector model to understand. So technical SEO shouldn’t stop at crawlability: optimizing for retrieval also means scoping sections cleanly, using consistent anchors, and adding schema that clarifies intent.

How do you implement structured data for retrieval?

- Add FAQ, How-To, and Product schema to every page that answers a micro-intent. Each markup block becomes a machine-readable “vector booster.”

- Nest schema logically: Product → FAQ → Review. This mirrors the passage hierarchy and gives MUVERA a clear semantic map.

- Include sameAs links that tie your organization or authors to authoritative profiles of the same entity, and cite patents, standards, or datasets as sources, to bake trust into the knowledge graph.
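As an illustration of the markup itself, here is a small Python helper that emits schema.org FAQPage JSON-LD from question-answer pairs. The type and property names (FAQPage, mainEntity, Question, acceptedAnswer, Answer) come from the schema.org vocabulary; the function name and the sample Q&A are hypothetical, and whether Google shows a rich result for a given type is governed separately by Google's structured-data guidelines.

```python
import json


def faq_jsonld(pairs):
    """Build schema.org FAQPage JSON-LD from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }


markup = faq_jsonld([
    ("How do I know if a mug is leak-proof?",
     "Fill it, close the lid, and hold it upside down over a sink for 30 seconds."),
])
print('<script type="application/ld+json">')
print(json.dumps(markup, indent=2))
print("</script>")
```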

How do you rebuild internal linking for retrieval?

- Map one clean anchor per intent, e.g., “heat-retention chart”, “leak-proof demo video.” Reuse the exact phrasing site-wide. MUVERA treats consistent anchors as semantic breadcrumbs.

- Keep link depth shallow (≤3 clicks) so Googlebot still crawls efficiently; fast discovery feeds faster vector updates.

- Add “mini-hubs” at the end of each post: 3–5 contextual links under a “Related guides” H3. Competitors using this pattern (e.g., AnalyticaHouse) rank for 30% more long-tail queries.
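Click depth can be checked with a breadth-first search over your internal-link graph, since BFS reaches each page first along a shortest path. A minimal sketch; the link map and URLs are hypothetical, and in practice you would build the map from a crawl.

```python
from collections import deque


def click_depths(links, start):
    """Breadth-first search: minimum number of clicks from the start page to each reachable page."""
    depths = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, ()):
            if target not in depths:          # first visit is the shortest path in BFS
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def pages_too_deep(links, start, max_depth=3):
    """Pages that need more than max_depth clicks from the start page."""
    return sorted(page for page, depth in click_depths(links, start).items() if depth > max_depth)


links = {                                     # hypothetical internal-link graph
    "/": ["/mugs"],
    "/mugs": ["/mugs/steel"],
    "/mugs/steel": ["/mugs/steel/review"],
    "/mugs/steel/review": ["/mugs/steel/review/2024"],
}
print(pages_too_deep(links, "/"))             # ['/mugs/steel/review/2024']
```

Pages that never appear in click_depths are orphans: no chain of internal links reaches them from the start page.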

Mobile-first performance benchmarks to hit

| Metric | Target | Why it matters for MUVERA |
|---|---|---|
| Largest Contentful Paint (LCP) | ≤1.8 s | Slow pages can time out vector processing, delaying index updates |
| Interaction to Next Paint (INP) | ≤200 ms | SGE often surfaces your page; sluggish INP harms perceived quality |
| Cumulative Layout Shift (CLS) | ≤0.10 (unitless score) | Prevents user frustration in AI-overview click-throughs |

Compress images, lazy-load below-the-fold video, and preconnect to font CDNs. Competitor pages that hit these numbers keep MUVERA’s crawl-return frequency high.
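Core Web Vitals are assessed at the 75th percentile of page loads, so a page should be checked against the targets above using its p75 values, not its averages. This sketch copies the targets from the table. It uses the nearest-rank percentile, which is a simplification. The samples are hypothetical; in practice they would come from CrUX or your own real-user monitoring.

```python
import math
from typing import Dict, List

# Targets from the benchmark table (LCP in seconds, INP in milliseconds, CLS unitless).
TARGETS = {"lcp_s": 1.8, "inp_ms": 200.0, "cls": 0.10}

def p75(samples: List[float]) -> float:
    """75th percentile using the nearest-rank method."""
    ordered = sorted(samples)
    rank = math.ceil(0.75 * len(ordered))  # 1-based rank
    return ordered[rank - 1]

def assess(field_data: Dict[str, List[float]]) -> Dict[str, bool]:
    """True where the page's p75 value meets the target."""
    return {metric: p75(values) <= TARGETS[metric] for metric, values in field_data.items()}

page = {
    "lcp_s": [1.2, 1.9, 2.0, 2.4],
    "inp_ms": [80, 120, 150, 310],
    "cls": [0.0, 0.02, 0.05, 0.3],
}
print(assess(page))
```

In this example, one slow 310 ms interaction does not fail INP, because p75 ignores the worst quarter of loads. LCP fails because most loads are slower than 1.8 s.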

Infrastructure requirements to support fast retrieval

- HTTP/2 or HTTP/3: Multiplexed requests cut crawl time by up to 30%.

- Edge caching (CDN) with smart purging: purge only the URLs whose sections you update, so MUVERA re-ingests vectors faster.

- Image CDN serving AVIF/WebP with a JPEG/PNG fallback: keeps multi-format assets light, so you get more image vectors for less bandwidth.
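An image CDN usually picks the format from the browser’s `Accept` request header. This is a simplified sketch of that negotiation. It ignores q-values, so a client that sent `image/avif;q=0` would still get AVIF. A production setup would also send `Vary: Accept` so caches store one variant per format.

```python
def pick_image_format(accept_header: str) -> str:
    """Choose the lightest format the client lists, falling back to JPEG."""
    accepted = {part.split(";")[0].strip().lower() for part in accept_header.split(",")}
    for mime, ext in (("image/avif", "avif"), ("image/webp", "webp")):
        if mime in accepted:
            return ext
    return "jpeg"

# Example header in the style modern browsers send for images.
browser_header = "image/avif,image/webp,image/apng,image/*,*/*;q=0.8"
print(pick_image_format(browser_header))
print(pick_image_format("image/webp,*/*"))
print(pick_image_format("*/*"))
```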

How to integrate GA4 and Search Console for MUVERA tracking?

Essential GA4 configurations for MUVERA reporting

- Create an “SGE | MUVERA” exploration that filters for Session source = google and Landing page + query string containing &ved=2ah (reportedly common in AI-overview clicks).

- Track scroll depth and video starts as conversion events. High engagement on key passages hints that the multi-vector retrieval algorithm has found the right vectors.

- Build an “Intent Bucket” custom dimension: group pages by micro-jobs (compare, demo, chart, guide). Analyse which bucket wins new SERP features.
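Before setting up the custom dimension, you can prototype the “Intent Bucket” grouping offline on exported GA4 rows. This sketch assumes rows with hypothetical keys `landing_page` and `engaged_sessions`. The URL patterns for each bucket are also hypothetical and must match your own URL scheme. Once the grouping looks right, you could send the bucket as an event parameter and register it as a custom dimension in GA4.

```python
import re
from collections import Counter
from typing import Dict, Iterable, List, Tuple

# Hypothetical URL patterns per micro-job bucket; first match wins.
BUCKET_PATTERNS: List[Tuple[str, re.Pattern]] = [
    ("compare", re.compile(r"/(vs|compare|best)[-/]")),
    ("demo", re.compile(r"/(demo|video)[-/]")),
    ("chart", re.compile(r"/(chart|data)[-/]")),
    ("guide", re.compile(r"/(guide|how-to)[-/]")),
]

def intent_bucket(landing_page: str) -> str:
    for name, pattern in BUCKET_PATTERNS:
        if pattern.search(landing_page):
            return name
    return "other"

def engaged_sessions_by_bucket(rows: Iterable[Dict[str, object]]) -> Dict[str, int]:
    totals: Counter = Counter()
    for r in rows:
        totals[intent_bucket(str(r["landing_page"]))] += int(r["engaged_sessions"])
    return dict(totals)

rows = [
    {"landing_page": "/compare/leak-proof-mugs/", "engaged_sessions": 40},
    {"landing_page": "/demo/leak-test/", "engaged_sessions": 25},
    {"landing_page": "/guide/clean-a-travel-mug/", "engaged_sessions": 10},
    {"landing_page": "/compare/steel-vs-ceramic/", "engaged_sessions": 5},
    {"landing_page": "/about/", "engaged_sessions": 3},
]
print(engaged_sessions_by_bucket(rows))
```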

Search Console enhancements and MUVERA-specific filters

- Regex filter on Queries: chart|demo|pdf|vs|leak|clean – these modifiers usually signal rich-intent searches that depend on multi-format vectors.

- Search Appearance → “AI overview” (rolling out during 2025): watch which URLs feed the overview card; those passages are winning MUVERA’s trust.
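It helps to test a query regex on exported queries before pasting it into Search Console. Search Console’s regex filter uses RE2 syntax, which supports `\b` word boundaries. This sketch uses Python’s `re.search`, which matches anywhere in the string. It compares the loose pattern from the list above with a word-boundary variant. The sample queries are hypothetical.

```python
import re
from typing import List

LOOSE = re.compile(r"chart|demo|pdf|vs|leak|clean")          # pattern from the list above
STRICT = re.compile(r"\b(chart|demo|pdf|vs|leak|clean)\b")   # whole-word variant

def matching(queries: List[str], pattern: re.Pattern) -> List[str]:
    """Queries where the pattern matches anywhere in the (lower-cased) string."""
    return [q for q in queries if pattern.search(q.lower())]

queries = [
    "steel vs ceramic mug",
    "charter bus rental",
    "travel mug leak test",
    "how to clean a travel mug",
]
print(matching(queries, LOOSE))
print(matching(queries, STRICT))
```

The loose pattern also catches “charter bus rental” because it contains “chart”. The word-boundary version drops that false positive, but it would also skip variants like “cleaning”, so choose per modifier.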

Trend signals to monitor in your data

| Signal | Tool | Action to take |
|---|---|---|
| Passage clicks but low impressions | GA4 (with a Google Tag Manager Element Visibility trigger) | Tighten H2 wording; MUVERA may be missing the intent. |
| Rising zero-click impressions | Search Console API | Add brand mention or call-to-action near the cited passage. |
| New SERP features (FAQ, image pack) | Ahrefs/Semrush | Double-down on that format across the cluster. |

How do MUVERA and SGE work together?

MUVERA decides which pages (and passages) deserve a shot; SGE/AI Overviews decides how to present the answer.

Together they form the new funnel: retrieve → generate → serve. If the Google MUVERA algorithm doesn’t retrieve your passage, SGE can’t feature it – full stop.

MUVERA builds a shortlist of passages (text, images, video, and tables) that best answer the user’s question, based on high multi-vector similarity to the query.
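“Multi-vector similarity” has a concrete form. The published MUVERA research approximates Chamfer similarity: each query vector is matched to its best document vector by inner product, and those best scores are summed. MUVERA compresses each side into one fixed-dimensional encoding (FDE) so that a single inner product approximates that score. This lets an ordinary maximum inner product search index produce candidates, which are then re-ranked with exact Chamfer.

The sketch below is a toy illustration, not Google’s production code. The vectors and random seed are hypothetical. The FDE is simplified to one SimHash partitioning with no repetitions, no projection and no filling of empty buckets.

```python
import random
from typing import List

Vector = List[float]

def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))

def chamfer(query: List[Vector], doc: List[Vector]) -> float:
    """Sum over query vectors of the best (max inner product) document match."""
    return sum(max(dot(q, d) for d in doc) for q in query)

def random_hyperplanes(dim: int, k: int, seed: int = 0) -> List[Vector]:
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(k)]

def simhash_bucket(v: Vector, planes: List[Vector]) -> int:
    """Sign pattern of v against k hyperplanes selects one of 2**k buckets."""
    idx = 0
    for p in planes:
        idx = (idx << 1) | (1 if dot(v, p) > 0 else 0)
    return idx

def fde(vectors: List[Vector], planes: List[Vector], is_query: bool) -> Vector:
    """Simplified FDE: query vectors are summed per bucket, document vectors
    are averaged per bucket; buckets are concatenated (length 2**k * dim)."""
    dim, n_buckets = len(vectors[0]), 2 ** len(planes)
    sums = [[0.0] * dim for _ in range(n_buckets)]
    counts = [0] * n_buckets
    for v in vectors:
        b = simhash_bucket(v, planes)
        counts[b] += 1
        sums[b] = [s + x for s, x in zip(sums[b], v)]
    if not is_query:
        sums = [[s / c for s in row] if c else row for row, c in zip(sums, counts)]
    return [x for row in sums for x in row]

query = [[1.0, 0.0], [0.0, 1.0]]
doc = [[1.0, 0.0], [0.0, 2.0], [-1.0, 0.0]]
planes = random_hyperplanes(dim=2, k=2, seed=7)
print(chamfer(query, doc))                                     # exact multi-vector score
print(dot(fde(query, planes, True), fde(doc, planes, False)))  # one-vector approximation
```

The exact score here is 1 + 2 = 3. The approximation depends on how the random hyperplanes split the vectors, which is why the full method repeats the partitioning several times.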

What does SGE do on top?

- Synthesizes an overview. An LLM composes a short, plain-English answer using MUVERA’s shortlisted passages.

- Cites sources. It links to pages that supply key facts, visuals, or steps.

- Invites follow-ups. It suggests related questions, sometimes pulling fresh passages for those too.

Result: Your brand can appear inside the AI overview (and in “from the web” callouts) even if the user never clicks a blue link.

Example use-case:

Query: “best leak-proof travel mug for commuters under ₹1,500”

- MUVERA step: It retrieves your product roundup and a 30-second leak-test video from a support post. Your heat-retention chart also matches a separate part of the query (“keeps coffee hot”).

- SGE step: The overview summarizes: “Leak-proof picks under ₹1,500 with heat retention over 6 hours…” and cites your page for the chart and the demo video.

- Outcome: You earn top-of-SERP visibility (citation, image/clip), even if the user doesn’t click immediately.

How to win zero-click search with an AI overview strategy?

AI Overviews (SGE) often answer the query without a click. To win in this new reality, your goal is two-fold: be the source MUVERA retrieves and SGE cites, and make the on-page experience so useful that people still want to click for more.

1. Create citable, must-click content

Make the bit most likely to be summarized excellent, then give readers a reason to visit.

- Lead with the core answer in 1–2 lines (so MUVERA can grab it), then layer extras below: examples, charts, step-by-steps, downloads.

- Offer what an overview can’t fully show: original data, side-by-side tables, short demo videos, and templates.

This is a durable SEO strategy because MUVERA rewards clear, self‑contained sections.

Result: The Google MUVERA algorithm retrieves your crisp answer; SGE quotes it; the rest of the page earns the click.
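The “lead with the core answer in 1–2 lines” rule can be checked before publishing. This is a rough sketch with three assumptions. Drafts are written in Markdown with `##`/`###` headings. A section’s first paragraph ends at the first blank line. Counting `.`, `!` or `?` followed by whitespace is a good-enough sentence counter, although abbreviations like “Dr.” will be over-counted.

```python
import re
from typing import List, Tuple

def first_paragraphs(markdown: str) -> List[Tuple[str, str]]:
    """(heading, first paragraph) for each H2/H3 section."""
    parts = re.split(r"^#{2,3}[ \t]+(.+)$", markdown, flags=re.MULTILINE)
    # parts = [preamble, heading1, body1, heading2, body2, ...]
    out = []
    for heading, body in zip(parts[1::2], parts[2::2]):
        paragraphs = [p.strip() for p in body.strip().split("\n\n") if p.strip()]
        out.append((heading.strip(), paragraphs[0] if paragraphs else ""))
    return out

def count_sentences(text: str) -> int:
    return len(re.findall(r"[.!?](?=\s|$)", text))

def long_openers(markdown: str, max_sentences: int = 2) -> List[Tuple[str, int]]:
    """Sections whose opening paragraph runs past max_sentences."""
    counted = [(h, count_sentences(p)) for h, p in first_paragraphs(markdown)]
    return [(h, n) for h, n in counted if n > max_sentences]

draft = """## Which mug is most leak-proof?
Model A passed every shake test. It also fits standard cup holders.

## How long does it keep coffee hot?
We tested six mugs. We filled each one with hot coffee. We logged the temperature every hour.
"""
print(long_openers(draft))
```

The second section is flagged because its opener spends three sentences on method before giving the answer.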

2. Brand building in content

Zero-click can still be a branding win.

- Use a confident, helpful voice and light brand framing: “At [Brand], we found…”

- Caption visuals with subtle attribution: “Chart by [Brand].”

- Keep author names, credentials, and a clean site name visible when SGE cites you; this boosts recognition and trust.

Result: Even without a click, users remember the source.

3. Focus on questions AI can’t fully answer

Some searches need judgment, comparison, or depth.

- Target subjective or multi-factor queries (“best X for Y use-case”), where users want to compare and explore.

- Build interactive or deeper assets (filters, calculators, long-form reviews, community Q&A) that encourage on-site evaluation.

- For quick-fact queries, accept zero-click and optimize to be the cited answer; for exploratory intent, design pages that beat summaries.

Result: You capture the clicks where summaries fall short.

4. Optimize to be the source, not the victim

If someone wins the overview, make sure it’s you.

- Write liftable passages: plain language, concrete stats, and tight H2/H3s. This multi-vector approach makes each block retrievable on its own.

- Add proof in the same section (image/chart/video + one-line method note) so MUVERA’s multi-vector retrieval sees a complete, trustworthy answer.

- After you earn citations, reuse that credibility off-site (“As featured in Google’s answer…”).

Result: You win visibility first, then engineer the page to convert curiosity into clicks.

Conclusion

With Google MUVERA, SEO starts before ranking. If your passage isn’t retrieved, other SEO factors will never get a turn. Adopting a multi-vector approach and leaning on efficient multi-vector retrieval principles (clear sections, liftable facts) puts your best ideas on the shortlist.

Think section-first. Clear H2/H3s, direct answers, and in-passage proof make your content retrievable.

MUVERA feeds both the SERP and AI Overviews. If your passage is liftable, SGE can cite you, even in zero-click moments.

The playbook is simple: think like a model, not a crawler. Write for intent, not just keywords. Keep pages fast and structured.

That’s the shift – retrievability first, ranking second.

Got questions or a MUVERA win (or wipeout) to share? Drop a comment. I’ll help you troubleshoot or celebrate.